Expected Number of Steps of a Markov Process Math, CS, Data

If we want to find the expected number of steps until “completion” of a Markov process, often we can use conditioning to get a sufficient system of equations. Each state provides one equation. Let $T_{S_i}$ be a random variable corresponding to the number of steps required to reach completion, starting from state $S_i$. Finally, let $p_{i,j}$ be the transition probability from $S_i$ to $S_j$.

Example

Here’s an example of this technique. This question comes from an old qualifying exam:

Hidden inside each box of Primate brand breakfast cereal is a small plastic figure of an animal: an ape, a baboon, or a chimp. Suppose a fraction $\alpha$ of the very large population of cereal boxes contain apes, a fraction $\beta$ contain baboons, and a fraction $\gamma$ contain chimps. Find the expected number of boxes you need to buy before you have at least one figure of each type.

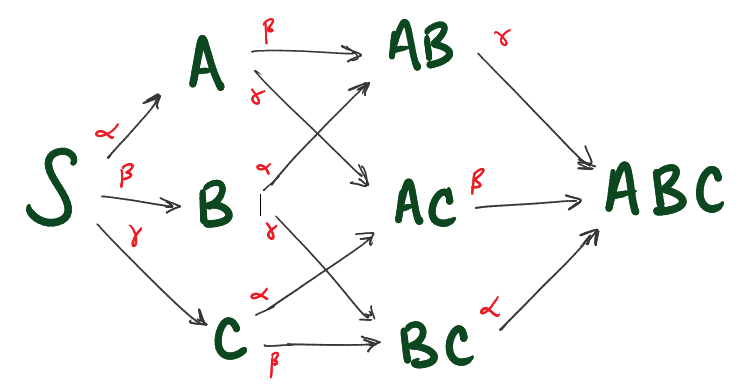

The situation can be modelled by a Markov process corresponding to the following diagram. $S$ represents the starting state. To simplify the picture, arrows from a state to itself are not drawn but are implied to account for any “missing” probability.

Let $E_X(Y)$ denote the expected number of transitions required to reach state $Y$ from state $X$. Our goal is to find $E_S(ABC)$. By looking at the diagram and conditioning on the next step, we can derive the following recurrence relationships.

And by recognizing that the final transition to $ABC$ is a Geometric process, we realize that

Putting it all together,

blog comments powered by Disqus