Bivariate Normal Expectation of Product of Squares Math, CS, Data

Assume $X$ and $Y$ are jointly (bivariate) normal, each with a standard normal marginal distribution, and they have correlation coefficient $\rho$. Find the expectation of $X^2Y^2$.

First, consider the properties of $Y$ for a fixed $X$. In such a case, we have a simple linear model, and we know that $\E [Y \vert X] = \rho X$. Furthermore, $\rho^2$ represents the proportion of variation in $Y$ that is explained by $X$, so the proportion unexplained is $1 - \rho^2$. Because $Y$ has unit variance, this means $\mathrm{Var}(Y \vert X) = 1 - \rho^2$.

Also, note that $\E X^4 = 3$. This can be established by taking the fourth derivative of the standard normal moment generating function $e^{t^2/2}$ and evaluating it at $t=0$.
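As a quick check of this value, here is a minimal sketch in R. It differentiates the moment generating function symbolically with base R's `D()` and evaluates the result at $t = 0$, then cross-checks by numerically integrating $x^4$ against the standard normal density. The variable names (`d4`, `m4_mgf`, `m4_int`) are illustrative only.

```r
# Fourth derivative of the standard normal MGF exp(t^2 / 2), evaluated at t = 0
d4 <- quote(exp(t^2 / 2))
for (k in 1:4) d4 <- D(d4, "t")   # differentiate four times with respect to t
m4_mgf <- eval(d4, list(t = 0))

# Cross-check: integrate x^4 against the standard normal density
m4_int <- integrate(function(x) x^4 * dnorm(x), lower = -Inf, upper = Inf)$value

# Both values should be 3, up to numerical error in the integral
print(c(mgf = m4_mgf, integral = m4_int))
```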

Now, we can use iterated expectation to derive the result.

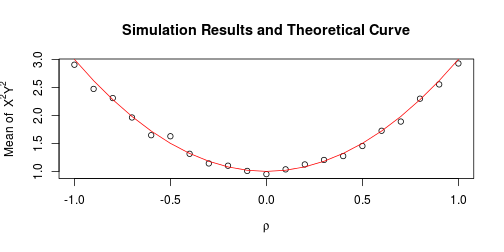
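Written out step by step, the argument can be sketched as follows. It uses $\E[Y^2 \vert X] = \mathrm{Var}(Y \vert X) + (\E[Y \vert X])^2$ with $\mathrm{Var}(Y \vert X) = 1 - \rho^2$, together with $\E X^2 = 1$ and $\E X^4 = 3$:

$$
\begin{aligned}
\E X^2 Y^2 &= \E\left[ X^2 \, \E[Y^2 \vert X] \right] \\
&= \E\left[ X^2 \left( 1 - \rho^2 + \rho^2 X^2 \right) \right] \\
&= (1 - \rho^2) \, \E X^2 + \rho^2 \, \E X^4 \\
&= 1 - \rho^2 + 3 \rho^2 \\
&= 1 + 2 \rho^2.
\end{aligned}
$$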

A simulation confirms our conclusion.

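```r
library(MASS)  # provides mvrnorm()

set.seed(1)    # fixed seed so the plot is reproducible
n <- 10000
mu <- c(0, 0)
rho <- seq(-1, 1, by = 0.1)
results <- rep(NA, length(rho))

# For each correlation, draw n bivariate normal pairs and average X^2 * Y^2
for (i in seq_along(rho)) {
  Sigma <- matrix(c(1, rho[i], rho[i], 1), nrow = 2)
  m <- mvrnorm(n, mu, Sigma)
  results[i] <- mean(m[, 1]^2 * m[, 2]^2)
}

# Simulated means (points) against the theoretical curve 2 * rho^2 + 1 (line)
plot(rho, results, ylab = expression(paste("Mean of ", X^2, Y^2)),
     main = "Simulation Results and Theoretical Curve", xlab = expression(rho))
lines(rho, 2 * rho^2 + 1, col = 2)
```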

Generalization

We can easily extend this result to the more general case of nonstandard normality. Assume $A \sim N(\mu_A, \sigma_A^2)$ and $B \sim N(\mu_B, \sigma_B^2)$ and they have correlation coefficient $\rho$. Then we can equivalently reexpress them as $A = \mu_A + \sigma_A X$ and $B = \mu_B + \sigma_B Y$ for some standard normal $X$ and $Y$ with correlation $\rho$.

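We will also need to find a couple more expectations along the way. By iterated expectation,

$$\E XY = \E\left[ X \, \E[Y \vert X] \right] = \rho \, \E X^2 = \rho.$$

And

$$\E X^2 Y = \E\left[ X^2 \, \E[Y \vert X] \right] = \rho \, \E X^3 = 0,$$

since the odd moments of a standard normal are zero.

Likewise, by symmetry, $\E XY^2 = 0$. Finally, we have all the pieces we need to attack the problem. Expanding the squares, the terms involving $\E X$, $\E Y$, $\E X^2 Y$, and $\E XY^2$ vanish, leaving

$$
\begin{aligned}
\E A^2 B^2 &= \E\left[ (\mu_A + \sigma_A X)^2 (\mu_B + \sigma_B Y)^2 \right] \\
&= \E\left[ (\mu_A^2 + 2 \mu_A \sigma_A X + \sigma_A^2 X^2)(\mu_B^2 + 2 \mu_B \sigma_B Y + \sigma_B^2 Y^2) \right] \\
&= \mu_A^2 \mu_B^2 + \mu_A^2 \sigma_B^2 + \sigma_A^2 \mu_B^2 + 4 \rho \mu_A \mu_B \sigma_A \sigma_B + \sigma_A^2 \sigma_B^2 (1 + 2 \rho^2).
\end{aligned}
$$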
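As in the standard case, a simulation can serve as a sanity check. The sketch below compares a Monte Carlo estimate of $\E A^2 B^2$ with the term-by-term expectation of $(\mu_A + \sigma_A X)^2 (\mu_B + \sigma_B Y)^2$. The parameter values, seed, and variable names are arbitrary choices made for illustration.

```r
library(MASS)  # provides mvrnorm()

set.seed(42)
n <- 1e6

# Illustrative parameter values (assumptions, chosen arbitrarily)
mu_A <- 1;    sigma_A <- 2
mu_B <- -0.5; sigma_B <- 1.5
rho  <- 0.6

# Covariance matrix of (A, B): Cov(A, B) = rho * sigma_A * sigma_B
Sigma <- matrix(c(sigma_A^2, rho * sigma_A * sigma_B,
                  rho * sigma_A * sigma_B, sigma_B^2), nrow = 2)
m <- mvrnorm(n, c(mu_A, mu_B), Sigma)
simulated <- mean(m[, 1]^2 * m[, 2]^2)

# Term-by-term expectation of (mu_A + sigma_A X)^2 (mu_B + sigma_B Y)^2,
# using E[XY] = rho, E[X^2 Y] = E[X Y^2] = 0, E[X^2 Y^2] = 1 + 2 rho^2
theoretical <- mu_A^2 * mu_B^2 + mu_A^2 * sigma_B^2 + sigma_A^2 * mu_B^2 +
  4 * rho * mu_A * mu_B * sigma_A * sigma_B +
  sigma_A^2 * sigma_B^2 * (1 + 2 * rho^2)

print(c(simulated = simulated, theoretical = theoretical))
```

The two numbers should agree to within Monte Carlo error; rerunning with other parameter values is an easy way to probe the formula further.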
